This article was written by Limbic Media’s CTO Manjinder Benning and republished with permission from LED Professional Review, an Austrian-based publication for innovators in LED technology.

————————————

Advancements in lighting control technology are allowing for sophisticated interactivity in LED mapping. These new technologies are bridging the gap between lighting control and AI, with the ability to analyze and map data input (such as audio) in real-time. Installations driven by interactive LED control technologies have their place in a variety of application spaces. Manjinder Benning, Founder and CTO of Limbic Media, explains how this new technology works, what its applications are, and what the future of interactive lighting control looks like – not only for end-users, but also for lighting designers and technicians.

Interactivity is a growing feature of consumer technology. Public spaces, from shopping malls to schools, hospitals, and entertainment venues, are increasingly designed with human-centric, interactive approaches. Designers are recognizing the value of interactive technology in driving traffic, educating, healing, and entertaining through platforms that engage and connect people on a multi-sensory level.

This trend has only begun to influence LED applications, and new technologies are making interactive LEDs more sophisticated and accessible than ever before. This article describes the relevance of interactive technology in various industries and the existing state of interactive LED mapping, and outlines an autonomous LED mapping technology that expands the current range of interactive LED applications.

Interactive technologies are growing in demand

The digital age has allowed anyone to curate information. Even with limited resources, millions are able to publish content and connect to global networks. People expect a greater level of participation and control over their digital environments. Yet much of our non-digital experience remains unchanged despite this shift. As a result, many facets of the real world struggle to stay relevant: retail centres are losing revenue, university enrolments are declining, and community-centred activities are struggling to survive in the Netflix era.

Interactive technologies are becoming more common across public and private life as organizations try to stay relevant and increase revenue:

- Voice-controlled smart hubs are growing in popularity in private residences, creating a common interactive interface for a number of domestic devices.

- Shopping malls are embracing interactive technologies, such as virtual try-on mirrors, interactive marketing displays, interactive LEDs on building facades (Singapore’s Illuma), virtual immersive experiences, and robotics.

- Some theatres are testing multi-sensory experiences by manipulating temperatures, scents, and tactile experiences.

- Education institutions of all levels are introducing more hands-on, interactive learning approaches, such as STEAM.

Implementation of these technologies through public art, entertainment, and education has uncovered many benefits. For participants, multi-sensory input can elevate entertainment value or, conversely, produce calming, synesthesia-like effects. In educational settings, it also appeals to various learning styles [5][6][7]. Interactive technologies benefit retail-focused spaces by increasing foot traffic and brand loyalty through customer engagement. They also transform under-utilized civic space into social hubs, improving public safety and revitalizing neighbourhoods.

It is clear that interactive, multi-sensory experiences are poised for rapid growth globally. Traditional sectors such as retail, entertainment, and education are struggling to catch up to our world’s digital transformation. These sectors are utilizing interactive technologies to bridge the gap between the digital and physical world. Modern LED technologies play an important but under-utilized role in interactive experiences.

Existing interactive LED technologies are limited

LED technologies have been under-utilized in the interactive marketplace for two main reasons: interactive LED technology has been limited to simplistic sound-to-light interaction, and even in this application, achieving interactions is a laborious and expensive process.

Until now, interactive LED technology has been largely realized through automatic music-to-light mapping. Systems that drive light fixtures from musical input, known as light organs, were first presented in a 1929 patent, which mechanically modeled light output automatically from audio frequencies. A 1989 patent employed electrical resonant circuits to respond to low, medium, and high frequencies. Modern digital music-to-light mapping systems have a number of advantages over these early systems: computers can process audio digitally in real time and extract control signals (for example, the energy in certain frequency bins, or the tempo) to map lighting schemes more meaningfully.

Some modern lighting control equipment, including hardware and software lighting consoles and VJ software systems, provides designer interfaces for mapping beat- or frequency-based control signals to parameters that modulate lighting. For example, a designer can map the amplitude of a 60–100 Hz frequency bin to DMX fixture brightness, creating a visual “pumping” effect in response to bass.
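To make the bass-to-brightness example concrete, here is a minimal Python sketch, assuming mono audio frames of floating-point samples between -1 and 1 and an 8-bit DMX brightness channel (0–255). The function names `band_energy` and `bass_to_dmx` are hypothetical and not part of any console's API, and a plain DFT evaluated only at the in-band bins stands in for the FFT a real system would likely use.

```python
import math
from typing import Sequence


def band_energy(frame: Sequence[float], sample_rate: float,
                f_lo: float, f_hi: float) -> float:
    """Sum of squared DFT magnitudes over bins whose centre frequency lies in [f_lo, f_hi]."""
    n = len(frame)
    energy = 0.0
    for k in range(n // 2 + 1):
        if not f_lo <= k * sample_rate / n <= f_hi:
            continue  # only evaluate the bins inside the band of interest
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(frame))
        im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(frame))
        energy += re * re + im * im
    return energy


def bass_to_dmx(frame: Sequence[float], sample_rate: float = 44100.0,
                f_lo: float = 60.0, f_hi: float = 100.0) -> int:
    """Map 60-100 Hz band amplitude to an 8-bit DMX brightness value (0-255)."""
    n = len(frame)
    # A full-scale sine centred on a bin has DFT magnitude n/2, so this is roughly 0..1.
    amplitude = math.sqrt(band_energy(frame, sample_rate, f_lo, f_hi)) / (n / 2)
    return round(255 * min(1.0, amplitude))
```

With 1,024-sample frames at 44.1 kHz, each bin is about 43 Hz wide, so only the bin centred near 86 Hz falls inside the 60–100 Hz band; a longer frame would give finer bass resolution at the cost of added latency.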

This paradigm of manually connecting simple control signals, most often derived from the frequencies of the incoming audio signal, is closely modeled after the original light organ techniques of the 20th century. There has been little innovation in this field since its inception. In addition, mapping light interactions this way is time-consuming for designers and, as a result, costly for consumers.

Potential beyond music-to-light mapping

Beyond music-to-light mapping, there is great potential for other interactive data inputs to drive LED systems. Recent years have seen an explosion in low-cost sensor technologies, coupled with easy-to-use single-board computers such as the Raspberry Pi. These technologies can sense data from physical environments more cheaply, accurately, and easily than was previously possible.

In terms of LED interactivity and mapping, data inputs could include:

- Audio

- Voice recognition

- Motion detection

- Data streams (from social media or other live inputs such as weather patterns)

Some commercially available software products, such as Isadora, enable complex input/output system building. They allow designers to map a variety of inputs (such as sensors) to multimedia outputs, such as projections or audio effects. Here again, LEDs as an output remain largely unexplored.
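For contrast with the autonomous approach described next, the manual paradigm can be pictured as a fixed routing table that a designer writes and tunes by hand. This is a hypothetical Python illustration, not Isadora's actual patching model; the input names (`motion`, `wind_kmh`), output parameter names, and scaling constants are invented for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Patch:
    """One hand-authored connection from a named input to a named output."""
    output: str                          # hypothetical output parameter name
    transform: Callable[[float], float]  # designer-chosen scaling function


def clamp01(x: float) -> float:
    return max(0.0, min(1.0, x))


# Every connection below must be chosen and tuned by a designer.
PATCHES: Dict[str, Patch] = {
    "motion": Patch("led_brightness", clamp01),
    "wind_kmh": Patch("led_motion_speed", lambda v: clamp01(v / 60.0)),
}


def route(inputs: Dict[str, float], patches: Dict[str, Patch]) -> Dict[str, float]:
    """Apply each patch to its input; inputs with no patch are ignored."""
    outputs: Dict[str, float] = {}
    for name, value in inputs.items():
        patch = patches.get(name)
        if patch is not None:
            outputs[patch.output] = patch.transform(value)
    return outputs
```

Any new input type, or a change in what the audience responds to, means editing this table by hand, which is the cost the article attributes to existing systems.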

Although very well designed and capable of handling complexity, existing systems still require expert designers to define the mappings between inputs and outputs, and to direct visualizations as inputs change and evolve. No existing technology has been capable of autonomously listening to data input, monitoring output, and learning to make intelligent decisions about how to map LED visuals over time.

This article discusses a new paradigm in interactive LED control: artificially intelligent systems that eliminate the programming expertise, time, and cost required to create advanced interactive LED experiences. Such a system intuitively recognizes distinct input features (from audio or otherwise) in real-time. Input features are mapped according to human-based preferences, without direct human control. This makes interactive LED applications more accessible and less costly to a variety of industries seeking interactive solutions, while elevating user experiences.

LED control that maps inputs and drives output autonomously

Imagine an LED installation that intuitively “listens to” audio or other real-time data input and adapts accordingly, learning over time with no human intervention. This new approach to interactive LED mapping uses an “intelligent” system built on three key elements.

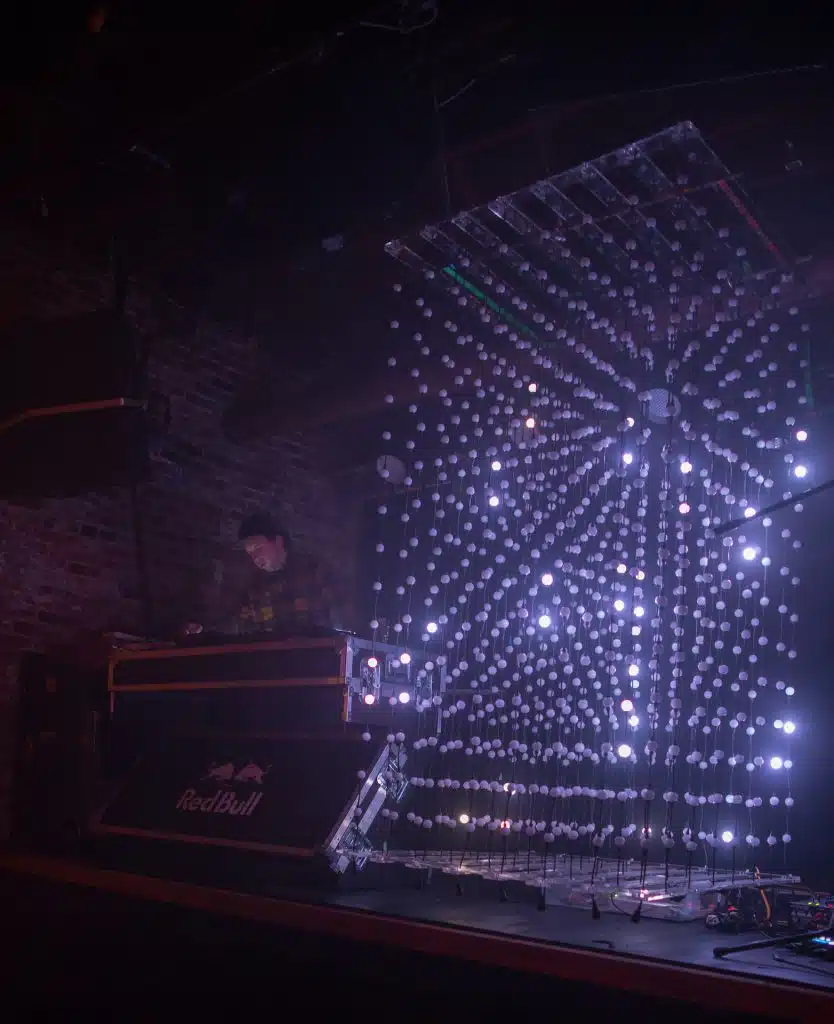

Fig. 1

The system is composed of:

- A temporal correlation unit (110). This acts like a brain, inputting, processing, recording, retrieving, and outputting data. Data inputs can include audio (either from a microphone or line-in audio), motion detection sensor inputs from a camera, data streams (from weather patterns, the stock exchange, tallied votes, or social media, for example), or interfaces that request data input from an audience

- An oscillator (140). This perturbs the inputs, introducing variation to the LED output. This produces light interactions that are lively, dynamic, and less predictable to the viewer

- A signal mixer unit (150). This mixes input signals in various ways to create different outputs

The temporal correlation unit analyzes input signals for distinct features, drawing on previously referenced inputs, and determines how the oscillator and signal mixer unit behave in response. The system also determines how the output signal moves through a specific color space.
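The signal flow in Fig. 1 can be approximated in a few lines of Python. This is a simplified sketch under stated assumptions, not the patented implementation: input features arrive already normalized to 0–1, the oscillator is a plain sine wave, the mixer is a clamped weighted sum, and the color-space traversal is modeled as a walk along an HSV hue arc. In the described system the temporal correlation unit would choose the weights and oscillator settings; here they are fixed, hypothetical parameters.

```python
import colorsys
import math
from typing import Sequence, Tuple


def oscillator(t: float, rate_hz: float = 0.5, depth: float = 0.2) -> float:
    """Slow sine perturbation in [-depth, depth] that keeps the output from looking static."""
    return depth * math.sin(2 * math.pi * rate_hz * t)


def mix(signals: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted sum of input signals, clamped to [0, 1]."""
    total = sum(s * w for s, w in zip(signals, weights))
    return max(0.0, min(1.0, total))


def to_rgb(level: float, hue_start: float = 0.6, hue_span: float = 0.3) -> Tuple[int, int, int]:
    """Move along a hue arc as the level rises; the level also sets HSV value (brightness)."""
    hue = (hue_start + hue_span * level) % 1.0
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, level)
    return (round(255 * r), round(255 * g), round(255 * b))


def frame(t: float, bass: float, treble: float,
          weights: Tuple[float, float] = (0.7, 0.3)) -> Tuple[int, int, int]:
    """One output colour at time t from two normalised input features."""
    level = mix([bass, treble], weights)
    level = max(0.0, min(1.0, level + oscillator(t)))
    return to_rgb(level)
```

Because the oscillator adds movement even when the input is steady, the same bass level produces slightly different colors from moment to moment, which approximates the "lively, dynamic, and less predictable" quality described above.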

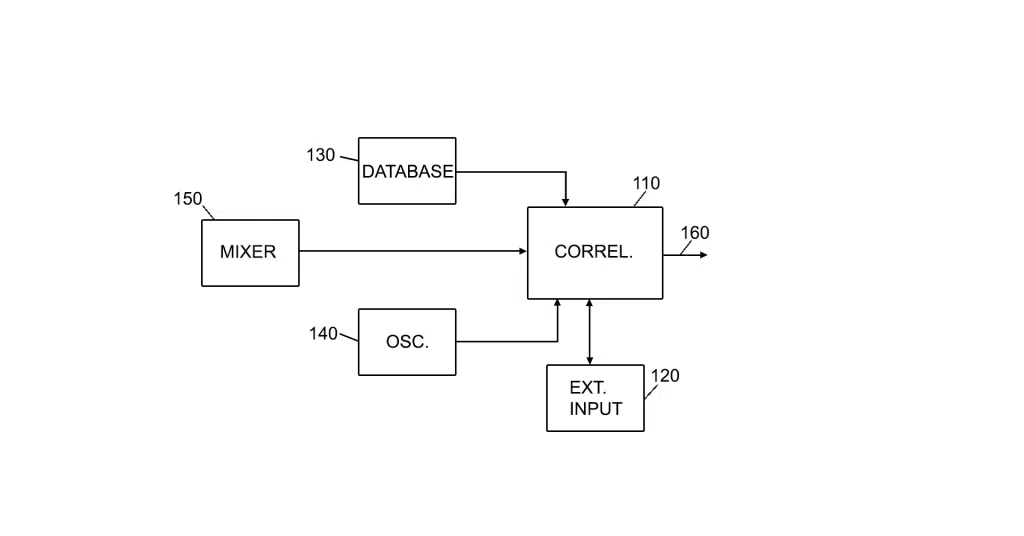

Fig. 2

Figure 3 expands on potential external inputs (120). As with prior technology, the system analyzes binned frequency content (210, 235) and time domain envelopes (215). In addition, the system recognizes and classifies higher-level musical features (220).

Some examples include:

- Percussion/other specific instruments

- Vocal qualities

- Musical genre

- Key

- Dissonance and harmony

- Sentiment

- Transitions (e.g. from verse to chorus)
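Of the features named above, the time-domain envelope (215) is the simplest to compute. A common way to track it is a one-pole envelope follower with separate attack and release times; the sketch below is a generic version of that technique rather than the system's own code, and the 5 ms attack and 200 ms release defaults are arbitrary assumptions. Higher-level features such as genre or sentiment would require trained classifiers and are not shown.

```python
import math
from typing import List, Sequence


def envelope(samples: Sequence[float], sample_rate: float,
             attack_ms: float = 5.0, release_ms: float = 200.0) -> List[float]:
    """Track the amplitude envelope of a signal with a one-pole attack/release filter."""
    # Smoothing coefficients: the envelope covers ~63% of a step in one time constant.
    a_att = math.exp(-1.0 / (sample_rate * attack_ms / 1000.0))
    a_rel = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    env = 0.0
    out: List[float] = []
    for x in samples:
        level = abs(x)
        # Rise quickly when the signal gets louder, fall slowly when it gets quieter.
        coeff = a_att if level > env else a_rel
        env = coeff * env + (1.0 - coeff) * level
        out.append(env)
    return out
```

The resulting envelope can drive brightness directly, and its release time effectively sets the visual decay of each flash.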

The system also interprets non-musical data inputs (225) in real time. These include non-musical audio features, such as speech recognition or environmental sounds (rain, wind, thunder, or footsteps), as well as the other non-audio data inputs described previously.

Features can be reflected in the LED-mapped output in many ways. LED parameters such as motion, color palette, brightness, and decay adapt to reflect specific input features. This creates LED displays that are mapped more intuitively to human preferences than those produced by previous light-mapping technology. The system’s ability to map intuitively and autonomously in real time heightens the user’s multi-sensory experience and expands the potential for LED interactivity.
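One way to picture this stage is as a function from a bundle of detected features to a set of LED parameters. In the Python sketch below, a hand-written rule table stands in for the learned, preference-based mapping the article describes; the feature names (`sentiment`, `tempo_bpm`, `loudness`), the palettes, and the scaling constants are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

RGB = Tuple[int, int, int]

# Hypothetical palettes keyed by a sentiment label.
PALETTES: Dict[str, List[RGB]] = {
    "calm": [(0, 40, 120), (0, 90, 160)],
    "energetic": [(255, 40, 0), (255, 200, 0)],
}


@dataclass
class LedParams:
    palette: List[RGB]
    motion_speed: float  # pixels per second
    brightness: float    # 0..1
    decay_s: float       # seconds for a flash to fade out


def map_features(features: Dict[str, object]) -> LedParams:
    """Rule-based stand-in for a learned feature-to-LED mapping."""
    sentiment = str(features.get("sentiment", "calm"))
    tempo = float(features.get("tempo_bpm", 100.0))
    loudness = float(features.get("loudness", 0.5))
    beat_period = 60.0 / tempo
    return LedParams(
        palette=PALETTES.get(sentiment, PALETTES["calm"]),
        motion_speed=tempo / 10.0,              # faster music, faster motion
        brightness=max(0.0, min(1.0, loudness)),
        decay_s=0.5 * beat_period,              # flashes fade within half a beat
    )
```

Tying decay to the beat period keeps flashes from smearing into each other at fast tempos, which is one example of the kind of choice a learned mapping would make on its own.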

Referenced data input determines LED mapping

An intelligent LED mapping system relies on referenced input signals. The system analyzes new data input for familiar features based on referenced input stored in the temporal correlation unit. Over time, the system optimizes database searches. This allows it to predict input features from audio or other data streams, and create a more intuitive, real-time visual LED output on its own.
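The reference lookup can be illustrated as a nearest-neighbour search over stored feature vectors, each tagged with the lighting cue it previously triggered. This Python sketch assumes fixed-length numeric feature vectors and a linear scan; the `Reference` type, the feature layout, and the cue names are hypothetical, and a larger database would probably call for an index structure (such as a k-d tree) to speed up the search.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Reference:
    features: Sequence[float]  # hypothetical layout: (bass, treble, tempo), each normalised to 0-1
    cue: str                   # identifier of the lighting cue used for this input


def nearest_cue(query: Sequence[float], database: List[Reference]) -> str:
    """Return the cue of the stored input whose features are closest to the query."""
    best = min(database, key=lambda ref: math.dist(query, ref.features))
    return best.cue


def remember(database: List[Reference], features: Sequence[float], cue: str) -> None:
    """Store a new reference so future lookups can reuse this input/cue pairing."""
    database.append(Reference(tuple(features), cue))
```

Calling `remember` after each well-received output is one simple way for the database to grow, which is what lets lookups improve over time.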

When the temporal correlation unit has been adequately trained, it can predict human listeners’ preferences and map LEDs accordingly for musical, other audio, and non-audio inputs. This provides a more intuitive, engaging user experience with no need for custom LED programming knowledge or real-time human control. The temporal correlation unit trains itself to map output effectively in three ways:

- The system acts as a neural network by comparing new data inputs to similar inputs stored in its database. New output features are modeled after those of the referenced inputs, which allows the system to quickly reuse previous lighting output configurations rather than creating them on the fly. Previous technology requires a technician to choose manually which lighting cues to load and when; this system makes those choices automatically. Neural networks can also be supervised. In a supervised neural network, the system recognizes specific data input features that indicate audience approval of the LED-mapped output. These input features could include manual switches, or face or voice recognition that indicates emotional states. This further refines the system’s output choices according to human preferences.

- The system can also use evolutionary algorithms. These are modeled after selection mechanisms found in evolutionary biology; related nature-inspired techniques draw on phenomena such as firefly attraction, ant pheromone trails, and bird flocking to optimize data searches. Evolutionary algorithms, such as a genetic algorithm, allow an LED control system to independently find and select the most effective lighting outputs without human control. As with a supervised neural network, a system governed by a genetic algorithm looks for specific audience cues that suggest approval of the mapped LED output. These cues serve as a fitness function, training the temporal correlation unit to respond to real-time input signals.

- Similarly to evolutionary algorithms, a system can use interacting intelligent agents. Agents also mimic natural patterns in code by responding to specific, predefined rules (e.g., a specific frequency produces a certain color space). Each agent applies a set of rules to generate temporal sequences for LED mappings, again seeking audience cues to train the system to respond appropriately to input. Agent rules can be parametric; for example, rule parameters can be determined by the physical arrangement of LEDs in 2D or 3D installations. A suite of techniques known as nature-inspired algorithms, which are modeled after naturally occurring patterns, is a credible source of generative content for lighting output. This approach works particularly well with large numbers of LED pixels.
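The genetic-algorithm approach can be sketched as follows. Each candidate lighting output is encoded as a short genome of normalized parameters, and the fitness function stands in for audience approval. Everything here is an assumption for illustration: the three-gene layout, the population settings, and especially `simulated_approval`, which replaces real audience cues (switches, face or voice recognition) with a hidden target so the example can run on its own. Selection is simple elitism (the fittest quarter survives) combined with uniform crossover and Gaussian mutation.

```python
import random
from typing import Callable, List

Genome = List[float]  # hypothetical layout: [hue, motion speed, decay], each in 0-1


def evolve(fitness: Callable[[Genome], float], genes: int = 3, pop_size: int = 20,
           generations: int = 40, mutation_sd: float = 0.1, seed: int = 0) -> Genome:
    """Search for the genome with the highest fitness using a simple genetic algorithm."""
    rng = random.Random(seed)
    population = [[rng.random() for _ in range(genes)] for _ in range(pop_size)]
    for _ in range(generations):
        ranked = sorted(population, key=fitness, reverse=True)
        elite = ranked[: max(2, pop_size // 4)]  # the fittest quarter survives unchanged
        children: List[Genome] = []
        while len(elite) + len(children) < pop_size:
            mother, father = rng.sample(elite, 2)
            # Uniform crossover: each gene comes from either parent.
            child = [rng.choice(pair) for pair in zip(mother, father)]
            # Gaussian mutation, clamped to the valid 0-1 range.
            child = [min(1.0, max(0.0, g + rng.gauss(0.0, mutation_sd))) for g in child]
            children.append(child)
        population = elite + children
    return max(population, key=fitness)


def simulated_approval(genome: Genome) -> float:
    """Stand-in for audience approval: higher when close to a hidden preferred look."""
    preferred = [0.8, 0.2, 0.5]  # hypothetical; a real system would measure reactions
    return -sum((g - p) ** 2 for g, p in zip(genome, preferred))
```

Because real approval signals are noisy and slow to collect, a deployed version would probably score each candidate over a longer observation window rather than trusting a single instantaneous reading.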

Implications of technology for industries and end-users

An intuitive method of mapping LEDs according to human preferences means that multi-sensory, interactive lighting experiences can be more immersive and emotive than ever before. An intelligent, autonomous LED control system has many benefits and applications for end-users and various sectors.

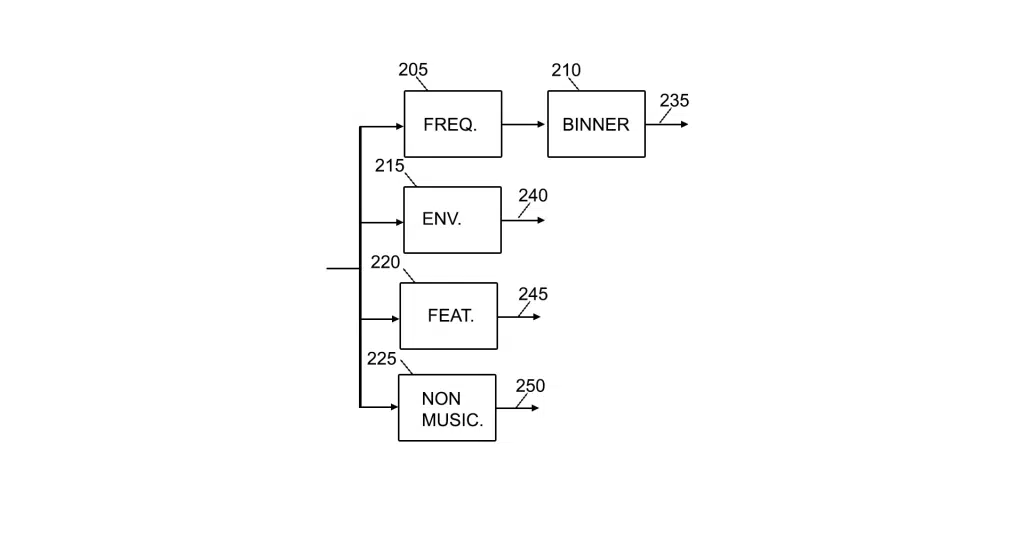

Interactive LED lighting at a climbing gym in Victoria, BC

Benefits for end-users:

- Educational programs can use such systems to leverage multi-sensory, interdisciplinary curriculums that address various learning styles

- Holiday, architectural, and other lighting companies that already employ LED technologies can use the system to take a more interactive, human-centric approach to design

- Retail centres can use the system’s interactivity, particularly live social media hashtags as data input, to attract customers and leverage brand presence online

- Cities can incorporate the system in their efforts to revitalize public space by:

  - Investing in interactive, public art using LEDs

  - Visualizing data gathered through smart city initiatives

  - Attracting foot traffic to business areas

  - Improving public safety

  - Place making and creating community focal points

- Clubs, venues, and AV teams can quickly and effectively create improved visual effects for live performances and DJs

- Public centres and exhibition venues that follow redesign cycles can adapt the system to changing data input types and LED configurations to refresh displays year after year

- Non-technical users are able to access sophisticated interactive technology without custom programming or design knowledge

- Users can avoid the time and cost associated with creating and maintaining interactive LED displays

- LED displays can be controlled and scaled across multiple locations at a lower cost

Implications for technicians

It is often assumed that technological advances, particularly in AI, have the potential to destroy jobs. The described system simplifies or removes the programming process, making the technology more accessible and affordable than previous interactive LED technologies, but this does not necessarily imply obsolescence for lighting designers and technicians. Rather, the technology can advance the state of the art, providing a number of benefits for industry professionals.

Benefits for professionals:

- Provides a sophisticated tool for lighting designers that can be used in conjunction with existing professional lighting protocols such as DMX

- Saves lighting designers programming time

- Allows designers to scale large projects at a lower cost

- Opens the door to a wider variety of LED applications in industries outside the current status quo

- Allows designers to manipulate lighting schemes with data input other than music

- Allows designers to improve or incorporate audience interactivity
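Since the system is described as working alongside DMX, it helps to see what the final output step involves. A DMX512 universe carries a start code followed by up to 512 one-byte channel slots, so RGB pixels using three channels each fit 170 to a universe. The sketch below packs a list of RGB pixels into universe frames, assuming each pixel occupies three consecutive channels starting at address 1 (a common but fixture-dependent layout); `pack_dmx` is a hypothetical helper, and sending the frames over a USB-DMX interface, Art-Net, or sACN is not shown.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]
SLOTS_PER_UNIVERSE = 512
NULL_START_CODE = 0x00  # start code for standard dimmer/level data


def pack_dmx(pixels: Sequence[RGB]) -> List[bytes]:
    """Pack RGB pixels (0-255 per channel) into DMX512 frames: start code + 512 slots."""
    per_universe = SLOTS_PER_UNIVERSE // 3  # 170 pixels use 510 channels; 2 stay unused
    frames: List[bytes] = []
    for start in range(0, len(pixels), per_universe):
        slots = bytearray(SLOTS_PER_UNIVERSE)  # unused channels default to 0
        for i, (r, g, b) in enumerate(pixels[start:start + per_universe]):
            slots[3 * i:3 * i + 3] = bytes((r, g, b))
        frames.append(bytes([NULL_START_CODE]) + bytes(slots))
    return frames
```

Pixel data ends up in the same channel format whether it was hand-programmed or generated autonomously, which is what would let such a system coexist with existing DMX fixtures.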

————————————

Interactive technologies are poised for global growth, allowing various industries to offer engaging, multi-sensory experiences in non-digital settings. Applying interactivity to LED technologies opens a variety of doors into a number of sectors looking to attract, engage, and educate communities in settings that struggle to stay relevant in our digital world.

Until recent advancements in LED control technology, mapping data input to lighting design was limited to audio input using age-old light organ techniques. While low-cost, easy-to-use single-board computers such as the Raspberry Pi have opened new doors in LED mapping, the process still requires skilled lighting designers and programmers. The cost and time of creating and maintaining interactive LED displays with these methods have made interactive LED applications costly and inaccessible to a variety of industries and audiences.

A new technology, outlined in “System and Method for Predictive Generation of Visual Sequences,” addresses these barriers to new LED applications by controlling LED interactivity autonomously yet elegantly. The system analyzes data input, including music, non-musical audio, and non-audio data streams for distinct input features. Input features are mapped into distinct LED output parameters based on human preferences, and indexed into the system’s database. This indexing allows the system to autonomously predict upcoming data input and intelligently refine its output over time.

The system’s design avoids the need for time-consuming human programming and maintenance, creates LED mapping that looks aesthetically detailed and intuitive, and allows real-time interaction from a variety of data inputs. This has clear benefits for the LED lighting industry: it opens doors to new applications in sectors seeking interactive solutions for consumers, creates heightened multi-sensory end-user experiences, and offers a sophisticated tool for lighting technicians and professional designers.

References:

[5] Johnson, Gretchen L., and Edelson, Jill R. “Integrating Music and Mathematics in the Elementary Classroom.” Teaching Children Mathematics, Vol. 9, No. 8, April 2003, pp. 474–479.

[6] Wilmes, Barbara, Harrington, Lauren, Kohler-Evans, Patty, and Sumpter, David. “Coming to Our Senses: Incorporating Brain Research Findings into Classroom Instruction.” Education, Vol. 128, No. 4, Summer 2008, pp. 659–666.

[7] Kast, Monika, Baschera, Gian-Marco, Gross, Markus, Jäncke, Lutz, and Meyer, Martin. “Computer-based learning of spelling skills in children with and without dyslexia.” Annals of Dyslexia, 12 May 2011. DOI: 10.1007/s11881-011-0052-2